For my first quantum hackathon I integrated a custom Variational Quantum Eigensolver (VQE) into portfolio optimization to enhance scalability and performance on noisy intermediate-scale quantum (NISQ) devices. I introduced what I call a “Shot-Efficient” Simultaneous Perturbation Stochastic Approximation (SE-SPSA) algorithm, a modification of the classical SPSA, which dynamically adjusts the number of measurement shots during optimization.

Hardware Specific Error-Correction and Noise Resistant Portfolio Optimization with Variational Quantum Eigensolver

Martin Castellanos-Cubides, I. Chen-Adamczyk, H. Hussain, D. Li, T. Girmay, and Y. Zhen

Abstract

In this project, we integrate a custom Variational Quantum Eigensolver (VQE) into portfolio optimization to enhance scalability and performance on noisy intermediate-scale quantum (NISQ) devices. We introduce a Shot-Efficient Simultaneous Perturbation Stochastic Approximation (SE-SPSA) algorithm, a modification of the classical SPSA, which dynamically adjusts the number of measurement shots during optimization. This approach effectively balances resource efficiency and accuracy, making it suitable for the constraints of current quantum hardware. By mapping the portfolio optimization problem onto a quantum Hamiltonian, we leverage quantum algorithms to find optimal asset allocations that balance risk and return. We implement hardware-specific optimizations, including dynamic decoupling and qubit mapping, to mitigate noise and decoherence effects. Our results demonstrate that the combination of custom VQE and SE-SPSA effectively navigates the noisy landscape of NISQ devices, offering promising avenues for quantum-enhanced financial algorithms.
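To make the "mapping onto a quantum Hamiltonian" step concrete, here is a minimal sketch of the mean-variance portfolio cost that VQE portfolio tutorials commonly use: minimize q x^T Σ x - μ^T x over binary choices x (buy asset i or not), with the budget enforced as a quadratic penalty. The returns, covariance, risk factor `q`, `budget`, and `penalty` below are made-up illustration values, not data from the paper, and the paper's exact Hamiltonian may differ. Brute force only works for a handful of assets; the point of a VQE is to approximate the same minimum without enumerating all 2^n bitstrings.

```python
from itertools import product

# Made-up toy data for 3 assets (illustration only, not the paper's data).
mu = [0.10, 0.07, 0.12]  # expected returns
sigma = [                # covariance matrix
    [0.06, 0.01, 0.02],
    [0.01, 0.04, 0.01],
    [0.02, 0.01, 0.10],
]
q = 0.5        # risk-aversion factor (assumed)
budget = 2     # number of assets to pick (assumed)
penalty = 1.0  # weight of the budget-constraint penalty (assumed)


def cost(x):
    """Mean-variance QUBO cost: q*x^T Σ x - μ^T x + penalty*(sum(x) - budget)^2."""
    n = len(x)
    risk = sum(sigma[i][j] * x[i] * x[j] for i in range(n) for j in range(n))
    ret = sum(mu[i] * x[i] for i in range(n))
    return q * risk - ret + penalty * (sum(x) - budget) ** 2


def to_ising(x):
    """Map binary x_i in {0, 1} to spin z_i in {+1, -1} via x_i = (1 - z_i) / 2."""
    return [1 - 2 * xi for xi in x]


# The Hamiltonian's ground state is the bitstring with the lowest cost.
best = min(product([0, 1], repeat=len(mu)), key=cost)
```

Substituting x_i = (1 - z_i)/2 turns the cost into an Ising Hamiltonian over Pauli-Z operators, which is the form a VQE actually measures.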


Baby’s First Quantum Hackathon

One Hackathon, Extra Quantum Please!

Working with Real Quantum Computers

My goal for my first quantum hackathon was to do something unique and innovative. By that point I had built a good foundation in quantum algorithms: how they work, why they work, and where they shine. So when faced with optimizing a financial portfolio, I decided I had to go further than modeling it with a standard Variational Quantum Eigensolver. The VQE simulation would be my foundation, but my real challenge would be dropping the simulation and getting some real use out of it on the noisy quantum systems of the day. I spent the first night reading the literature on VQEs for portfolio optimization and recreating the standard model from it. By the next morning I had a working VQE simulation, and I began trying to cut through the noise.

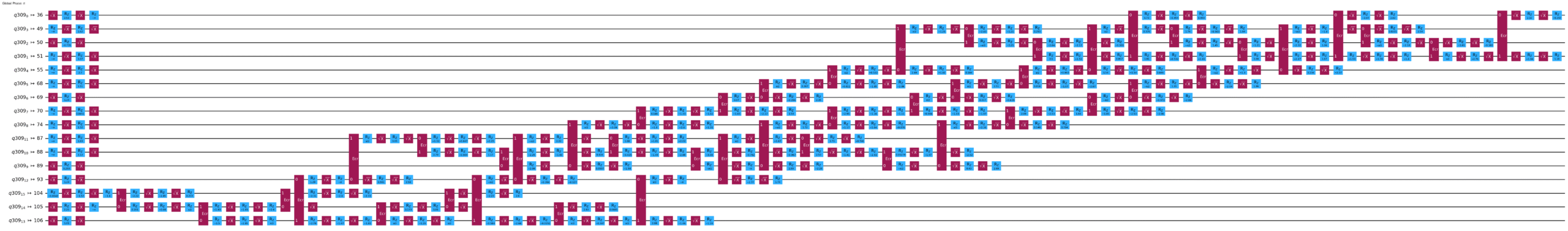

Circuit Optimization

To get results from a quantum computer, you have to think like a quantum computer. That’s why the first step was optimizing the circuit for the hardware. Every quantum device has a set of fundamental (native) gates from which all other gates are built. When you work in Qiskit, your circuit is transpiled: each of your operations is decomposed into a sequence of the target IBM device’s native gates. Optimization also involves minimizing the circuit depth by finding equivalent representations of operations that use fewer gates. The degree of this optimization can be controlled (Qiskit’s optimization level setting), with higher levels prioritizing a reduced gate count and circuit depth, while lower levels stick closer to the original circuit.
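Here’s a toy illustration of one idea behind these optimization passes: cancelling adjacent pairs of self-inverse gates (two Hadamards, two X gates, or two identical CNOTs multiply to the identity) and measuring how much depth that saves. This is not Qiskit’s implementation; the list-of-`(gate, qubits)` circuit format and both function names are hypothetical. In practice you’d call Qiskit’s `transpile` with an `optimization_level` and let it do this, and much more, against the real device’s native gates.

```python
# Gates that are their own inverse: applying one twice in a row is the identity.
SELF_INVERSE = {"x", "y", "z", "h", "cx"}


def cancel_adjacent_inverses(circuit):
    """Drop back-to-back identical self-inverse gates on the same qubits.

    `circuit` is a hypothetical list of (gate_name, qubits) tuples. Using the
    output list as a stack lets cancellations cascade: H X X H -> H H -> nothing.
    Note CX on (0, 1) and CX on (1, 0) are different gates and never cancel.
    """
    out = []
    for gate in circuit:
        if out and gate[0] in SELF_INVERSE and out[-1] == gate:
            out.pop()  # the pair multiplies to the identity
        else:
            out.append(gate)
    return out


def depth(circuit):
    """Circuit depth: each gate starts right after the latest gate on any of its qubits."""
    level = {}
    for _, qubits in circuit:
        layer = 1 + max(level.get(q, 0) for q in qubits)
        for q in qubits:
            level[q] = layer
    return max(level.values(), default=0)


circ = [("h", (0,)), ("x", (0,)), ("x", (0,)), ("h", (0,)),
        ("cx", (0, 1)), ("cx", (0, 1)), ("h", (1,))]
optimized = cancel_adjacent_inverses(circ)
```

Here the seven-gate, depth-7 circuit collapses to a single Hadamard on qubit 1.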

An Optimized Circuit

- Circuit depth minimization

- Hardware-specific native gate decomposition

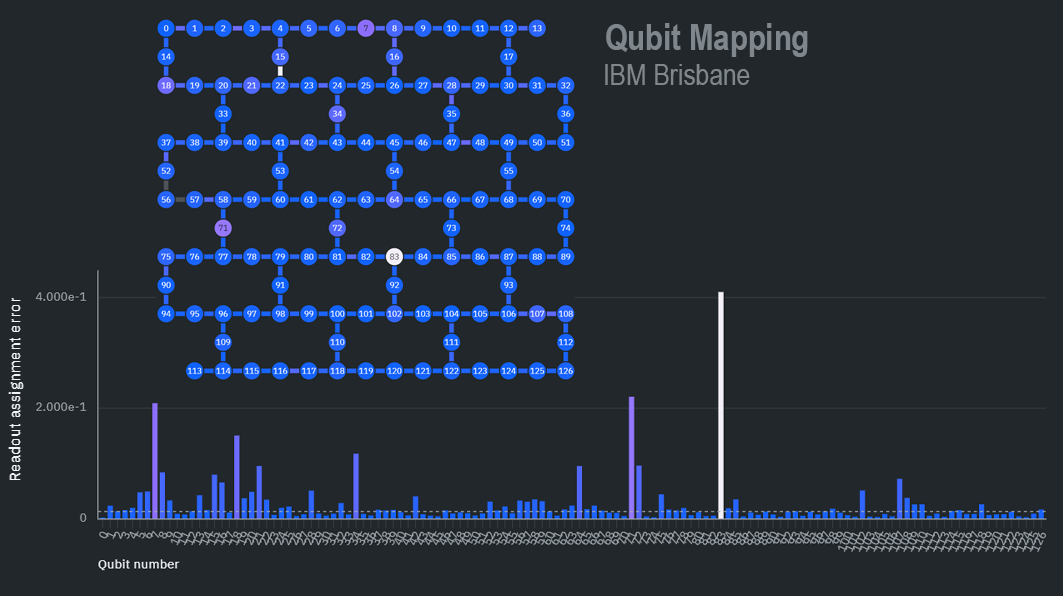

Another factor to consider is qubit mapping. We treat quantum circuits as largely theoretical constructs built from abstract logical qubits, but physical qubits are distinct components with their own properties. Not only do they have varying error rates, but they’re also physically connected to each other in a specific way. Both issues go double for superconducting quantum computers, where qubit fabrication is “you get what you get” and distant coupling is difficult. That is to say, when superconducting qubits are made, variations in fabrication permanently affect each qubit’s performance. Direct coupling is also typically limited to nearest neighbors in a superconducting chip layout, so non-neighboring qubits have to be brought together with SWAP gates before they can interact. Each SWAP decomposes into three CNOTs, adding more error to every such operation. So choosing high-fidelity qubits and placing interacting qubits physically close together improves performance. Luckily, finding a good qubit arrangement can also be handled through Qiskit’s optimization settings!
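As a rough sketch of why layout matters, the snippet below treats the chip as a graph, counts how many SWAPs two qubits need before they can interact (one per extra hop along the shortest path), and picks the directly coupled pair with the lowest combined readout error. The coupling map and error numbers are invented for illustration (they are not IBM Brisbane calibration data), and real layout passes also weigh gate errors, the whole circuit’s interaction graph, and more.

```python
from collections import deque

# Invented 5-qubit coupling map and readout errors (illustration only).
COUPLING = {0: [1], 1: [0, 2], 2: [1, 3, 4], 3: [2], 4: [2]}
READOUT_ERROR = {0: 0.020, 1: 0.015, 2: 0.010, 3: 0.035, 4: 0.012}


def distance(a, b):
    """Fewest couplings between physical qubits a and b (breadth-first search)."""
    seen = {a: 0}
    queue = deque([a])
    while queue:
        node = queue.popleft()
        if node == b:
            return seen[node]
        for nxt in COUPLING[node]:
            if nxt not in seen:
                seen[nxt] = seen[node] + 1
                queue.append(nxt)
    raise ValueError("qubits are not connected")


def swaps_needed(a, b):
    """SWAPs to make a and b neighbours: one per extra hop (each SWAP costs 3 CNOTs)."""
    return max(distance(a, b) - 1, 0)


def best_pair():
    """Directly coupled pair with the lowest combined readout error."""
    pairs = {(a, b) for a in COUPLING for b in COUPLING[a] if a < b}
    return min(pairs, key=lambda p: READOUT_ERROR[p[0]] + READOUT_ERROR[p[1]])
```

Mapping two interacting logical qubits onto physical qubits 0 and 4 would cost two SWAPs (six extra CNOTs) per interaction, while the pair (2, 4) needs none and has the lowest readout error.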

IBM Brisbane Qubit Mapping

- Qubits with low readout assignment error

- Qubit spacing minimized for lowest error coupling

Dynamical Decoupling

When running operations on a quantum computer, an idle qubit is your enemy. Coherence naturally decays over time (such is the way of quantum mechanics), so the longer we wait between operations, and ultimately before readout, the more error creeps into the system. There’s a distinction to be made between coherent decoherence (systematic and reversible) and decoherent decoherence (random and irreversible). These are unofficial terms I just made up, but they’ll serve us well in describing the phenomenon.


The idea is that the environment forces opposite states to decohere (partly) in opposite ways. Imagine that over a time t, a spin up particle is moved to the left in a specific way. Now imagine that a spin down particle is moved in that exact same way, just to the right. It doesn’t really matter what specific way they’re moved; it only matters that the motion is symmetrical. In this case, if we let a spin up particle decohere for t, flipped it to spin down, let it decohere for another t, and then flipped it back, we would end up with the same spin up particle at the same place we started! On a real qubit the idea is the same, except that what drifts symmetrically is the wavefunction’s phase rather than a physical position. And of course, this doesn’t work for any noise that isn’t symmetrical.
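The flip-and-wait trick is easy to check numerically. This minimal sketch assumes the idle error is a pure Z rotation by an angle `phi` (a static frequency offset, i.e. the “coherent decoherence” above), represents gates as 2×2 complex matrices, and compares a static error with one that drifts between the two halves of the echo. The helper names are hypothetical.

```python
import cmath


def matmul(a, b):
    """Multiply two 2x2 complex matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


X = [[0, 1], [1, 0]]  # the flip: swaps spin up and spin down


def idle(phi):
    """Idle period under a static Z error: Rz(phi) = diag(e^{-i phi/2}, e^{+i phi/2})."""
    return [[cmath.exp(-1j * phi / 2), 0], [0, cmath.exp(1j * phi / 2)]]


def echo(phi_first, phi_second):
    """Idle, flip, idle, flip back. Each new operator multiplies from the left."""
    u = idle(phi_first)
    u = matmul(X, u)
    u = matmul(idle(phi_second), u)
    return matmul(X, u)


def residual_phase(u):
    """Relative phase left between |0> and |1> (0.0 means the error fully cancelled)."""
    return cmath.phase(u[1][1] / u[0][0])


no_echo = residual_phase(idle(0.7))        # the error just accumulates
static = residual_phase(echo(0.7, 0.7))    # symmetric noise: cancels
drifting = residual_phase(echo(0.7, 0.4))  # noise changed mid-echo: partial cancel
```

With equal phases the relative phase returns to zero, while a drift from 0.7 to 0.4 rad leaves about 0.3 rad uncancelled, which is the “doesn’t work for noise that isn’t symmetrical” caveat in numbers.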

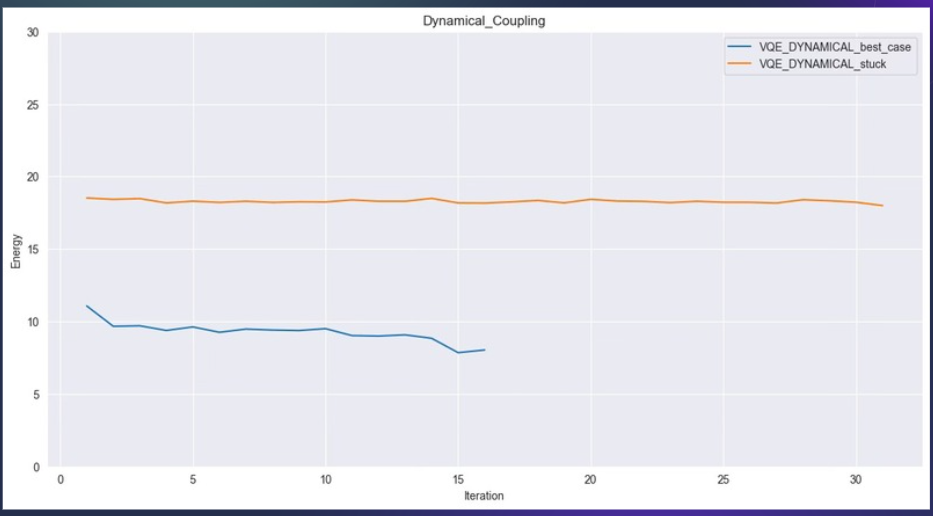

Through dynamical decoupling, we can apply a series of pulses that repeatedly invert the qubit’s state while it is idle, canceling out predictable phase noise over time! This procedure can (with some significant effort) be applied to a circuit, as I did for the VQE protocol. The sad truth, however, is that companies don’t like to let us play too much with their multimillion-dollar toys. Even after making 3 new IBM accounts for extra minutes on Brisbane, I could never get a run longer than 30 iterations before being kicked from the queue! And that was for a VQE run that got stuck in a local minimum. The run that was actually making progress made it to only 16 iterations before getting kicked! The results here are therefore largely inconclusive. I would note, however, that the relative steadiness of the lines may suggest a reduction in noise. Inconclusive results, courtesy of time-hoarding quantum giants, will become a big theme of this project.
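Dynamical decoupling generalizes the single echo to a train of evenly spaced X pulses filling the idle window. This minimal sketch assumes instantaneous pulses and a static detuning, uses CPMG-style spacing (pulses τ = T/N apart, with τ/2 at each edge), and tracks phase in the toggling frame, where each pulse flips the sign with which the detuning accumulates phase. The function names are hypothetical; on IBM hardware, Qiskit’s `PadDynamicalDecoupling` transpiler pass is what inserts sequences like this into idle slots.

```python
def pulse_times(idle_time, n_pulses):
    """Centres of n evenly spaced, instantaneous X pulses in an idle window.

    CPMG-style spacing: tau = idle_time / n_pulses between pulses, tau/2 at each edge.
    """
    if n_pulses < 1:
        raise ValueError("need at least one pulse")
    tau = idle_time / n_pulses
    return [(j + 0.5) * tau for j in range(n_pulses)]


def accumulated_phase(idle_time, times, detuning):
    """Net phase picked up from a static detuning, tracked in the toggling frame.

    Between pulses the phase grows at +detuning or -detuning; every X pulse
    flips that sign. With no pulses the phase is simply detuning * idle_time.
    """
    edges = [0.0] + sorted(times) + [idle_time]
    sign, phase = 1.0, 0.0
    for start, end in zip(edges, edges[1:]):
        phase += sign * detuning * (end - start)
        sign = -sign
    return phase


free = accumulated_phase(1.0, [], 0.3)                    # no decoupling
cpmg4 = accumulated_phase(1.0, pulse_times(1.0, 4), 0.3)  # four X pulses
```

An even number of pulses (X-X, or XY4) is the natural choice, since it also leaves the qubit back in its original state rather than flipped.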

Dynamical Decoupling

- Energy evolution of the VQE with a Dynamical Decoupling protocol implemented. The orange line shows a case where the algorithm converges around a local minimum, while the blue line represents a run that continues to decrease in energy, indicating progress toward a more favorable solution.

Shot-Efficient SPSA

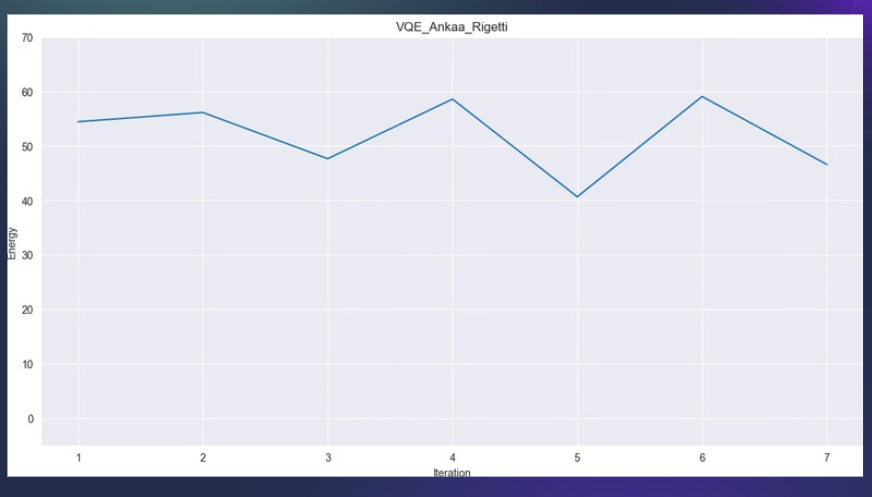

The last thing I wanted to try was running the optimization on different quantum computers, since qBraid had provided all of us with credits to connect to systems from big names like Rigetti, IonQ, and QuEra. My first choice was QuEra, because their system’s architecture practically eliminates the need for SWAP gates, but the limiting factor was once again money. To run code on these quantum systems, you pay a flat fee per circuit plus a fee for each shot of that circuit. Not all quantum systems are built equal, and the prices varied such that I could run half an iteration on QuEra’s system or 3 iterations on Rigetti’s.
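Here’s a back-of-the-envelope cost model for per-circuit-plus-per-shot pricing. It assumes each SPSA iteration needs two circuit evaluations (SPSA estimates the gradient from two energy measurements, whatever the number of parameters) and that one circuit per evaluation suffices, which holds for an all-Z portfolio Hamiltonian. The prices in the example are made up, not any provider’s actual rates.

```python
def run_cost(iterations, shots, per_task, per_shot, circuits_per_iteration=2):
    """Dollar cost of an optimizer run billed per circuit (task) and per shot.

    SPSA evaluates the energy at theta + c*delta and theta - c*delta each
    iteration, hence the assumed default of 2 circuits per iteration.
    """
    tasks = iterations * circuits_per_iteration
    return tasks * (per_task + shots * per_shot)


def max_iterations(budget, shots, per_task, per_shot, circuits_per_iteration=2):
    """How many whole iterations a fixed budget buys at a constant shot count."""
    per_iteration = circuits_per_iteration * (per_task + shots * per_shot)
    return int(budget // per_iteration)


# Made-up prices: $0.30 per task, $0.001 per shot.
expensive = max_iterations(10, 1000, 0.30, 0.001)
cheap = max_iterations(10, 100, 0.30, 0.001)
```

At these invented prices, a $10 budget buys 3 iterations at 1,000 shots but 12 at 100 shots: the shots-versus-iterations tension in a nutshell.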

It was clear the usual gradient descent method wasn’t gonna work in this situation, so I broke the method down to its bare components. I knew that the number of shots was used to approximate the probability distribution of the final wavefunction. However, I realized that even with a small number of shots, you would statistically drift toward the correct answer; you would just take a less direct path. Of course, the number of iterations would blow up if we tried to reach the final answer through drift alone, so it comes down to a shots-versus-iterations trade-off. The Shot-Efficient SPSA is a gradient descent that toes this line: it uses fewer shots at the beginning to get a general idea of which direction to go, then increases the shot count in later iterations, when finer adjustments near the global minimum are needed.
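Below is a minimal sketch of the shot-scheduling idea wrapped around textbook SPSA. The QPU is replaced by a hypothetical `noisy_energy` function (a simple quadratic landscape plus Gaussian shot noise with standard deviation proportional to 1/√shots), and the geometric, capped `shot_schedule` is one plausible ramp chosen for illustration; it is not necessarily the schedule used in the hackathon runs. The gain sequences use Spall’s commonly recommended exponents (alpha = 0.602, gamma = 0.101); every other constant is an assumption.

```python
import math
import random


def exact_energy(theta):
    """Hypothetical noiseless landscape with its minimum (energy 0) at theta = [1, 1, ...]."""
    return sum((t - 1.0) ** 2 for t in theta)


def noisy_energy(theta, shots, rng):
    """Stand-in for a QPU estimate: shot noise shrinks like 1/sqrt(shots)."""
    return exact_energy(theta) + rng.gauss(0.0, 1.0 / math.sqrt(shots))


def shot_schedule(k, min_shots=50, max_shots=2000, growth=1.5):
    """Few shots early (coarse direction finding), more later (fine adjustments)."""
    return int(min(max_shots, min_shots * growth ** k))


def se_spsa(theta, iterations=40, a=0.2, c=0.2, A=5, alpha=0.602, gamma=0.101, seed=0):
    """SPSA with a growing shot count per iteration. Returns (theta, total_shots)."""
    rng = random.Random(seed)
    theta = list(theta)
    total_shots = 0
    for k in range(iterations):
        a_k = a / (k + 1 + A) ** alpha  # decaying step size
        c_k = c / (k + 1) ** gamma      # decaying perturbation size
        shots = shot_schedule(k)
        # Perturb every parameter at once by +/-c_k (random Rademacher directions).
        delta = [rng.choice((-1.0, 1.0)) for _ in theta]
        plus = noisy_energy([t + c_k * d for t, d in zip(theta, delta)], shots, rng)
        minus = noisy_energy([t - c_k * d for t, d in zip(theta, delta)], shots, rng)
        total_shots += 2 * shots
        g = (plus - minus) / (2 * c_k)  # slope estimate along delta
        # The gradient estimate for parameter i is g / delta_i; step against it.
        theta = [t - a_k * g / d for t, d in zip(theta, delta)]
    return theta, total_shots


start = [0.0, 0.0, 0.0]
final, used = se_spsa(start)
```

The design choice lives entirely in `shot_schedule`: early iterations only need the gradient direction to be roughly right, so cheap noisy estimates are fine, while late iterations take small steps that shot noise would otherwise swamp. With these settings the run spends 131,326 shots instead of the 160,000 a constant 2,000 shots per evaluation would cost.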

With this new take on SPSA, and another $10 of credits, I was able to go from 3 to 7 iterations on Rigetti’s system. Now, I love this graph, but realistically? Besides the novelty of these points coming from a quantum computer, at $1.42 a point I can’t say I recommend the purchase. As a man, I like to think the downward trend is indicative of the method’s potential. As a physicist, I have to let you know it’s statistically inconclusive.

SE SPSA on Rigetti's Ankaa QPU

- Results are inconclusive, not enough money #sad

The Presentation, Oh God The Presentation!

Lots of things went wrong during this presentation, and believe me, I’d love to vent about my team members’ fumbles or the last-minute changes by the organizers that screwed us, but I find that in these situations the best thing to do is focus on what I did wrong.

The Speech

Watching the presentations before ours (and, as it turned out, all the ones after), I realized no one had tried using a quantum computer. In fact, no one had even ventured beyond recreating what they had seen in the provided literature. So I introduced the project with an (admittedly, maybe self-aggrandizing) speech about how we’re in the quantum age, and how we won’t get anywhere if we don’t try new things. If we sat around waiting for quantum computers to get better instead of trying to work with them now, we could be leaving a lot of optimization development on the table. Although the presentation plan had enough room for it in theory, I ultimately had to rush through major points and got cut off right around the SE-SPSA results. The lesson I took away: let the work speak for itself.*

The Team

I like to fly solo, or I should say I’m used to it. I carried the weight of this entire project on my back, but there was one group (in a different category) where you could tell each member had done their own part of the project. From the way they communicated what they had done and what they understood about it, you could tell that everyone was pulling their weight. Although it’s true that none of them had done anything new individually, the sum of their parts bridged the different literature they were following and created undeniable value. I was impressed, and I realized I had underestimated the value of coming in with a good team instead of entering as an individual and being paired up with people whose strengths and weaknesses I didn’t know. I had to put together a team.

Foreshadowing: The Value of an Idea

There was another lesson I could extrapolate from this experience: the asterisk next to the first lesson. I couldn’t see the full picture yet, however; that would take some more trial and error at MIT iQuHACK 2025.

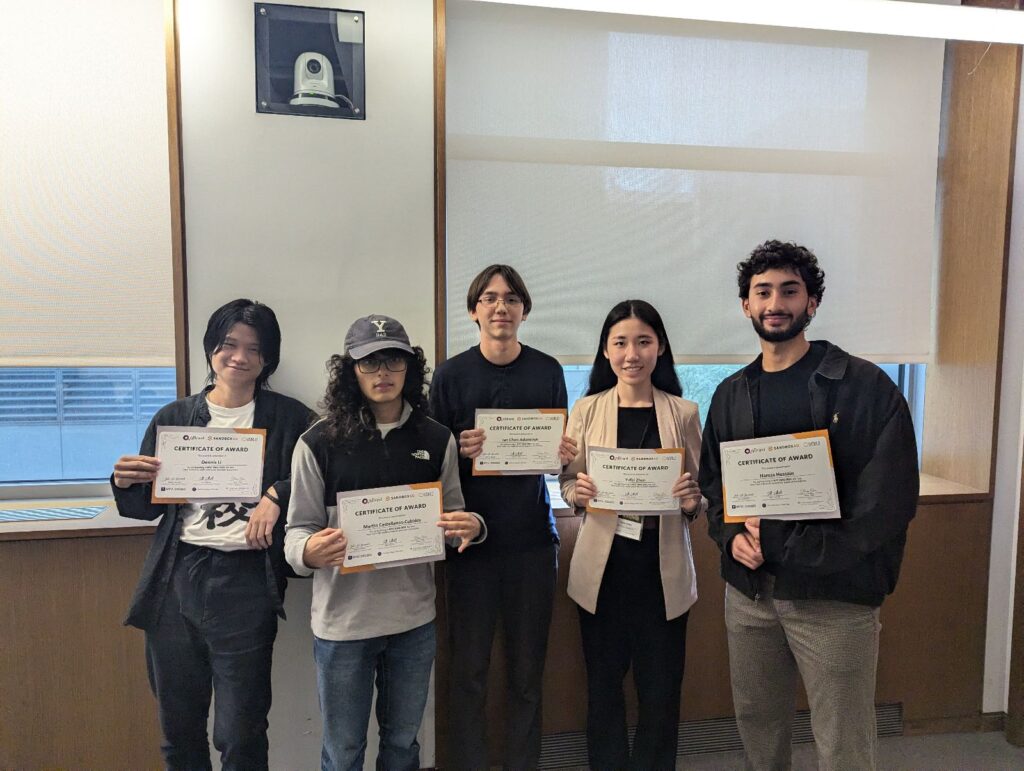

Group Pictures

Fun Fact

I stayed up three nights straight on methamphetamines finishing this project!